# 19 Feb 2016, 06:10PM: Comparing Codes of Conduct to Copyleft Licenses (My FOSDEM Speech):

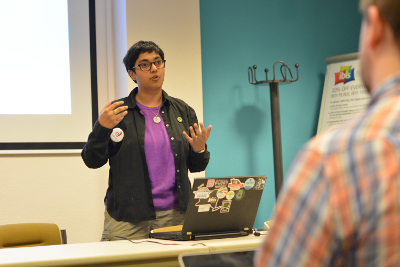

"Comparing codes of conduct to copyleft licenses": written notes for a talk by Sumana Harihareswara, delivered in the Legal and Policy Issues DevRoom at FOSDEM, 31 January 2016 in Brussels, Belgium. Video recording available. Condensed notes available at Anjana Sofia Vakil's blog.

My notes will all be available online after this, so you don't have to scramble to write down my brilliant insights, or, more likely, links. And I don't have any slides. If you really need slides, I'm sorry, and if you're like, YES! then just bask in the next twenty-five minutes.

I will briefly mention my credentials in speaking about this topic, especially since this is my first FOSDEM and many of you don't know me. I have been a participant in free and open source software communities since the late 1990s. I'm the past community manager for MediaWiki, and while at the Wikimedia Foundation, I proposed and implemented our code of conduct, which we call a Friendly Space Policy, for in-person Wikimedia technical spaces such as hackathons and conferences.

I wrote an essay about this topic last year, as a guest post on the social sciences group blog Crooked Timber, and received many thoughtful comments, some of which I'll be citing in this talk.

I am also a contributor to several GPL'd pieces of code, such as MediaWiki and GNU Mailman, on code and non-code levels. And I am the creator of Randomized Dystopia, a GPL'd web application that helps you in case you want to write scifi novels about new dystopian tyrannies that abrogate different rights.

And I have been flamed for suggesting codes of conduct; for instance, one Crooked Timber commenter called me "a wannabe politician, trying to find a way to become important by peddling solutions to non-problems." Which is not as bad as when one person replied to me on a public mailing list and said, "Deja Vue all over again. I finally understand why mankind has been plagued by war throughout its entire history...." So maybe I'm the cause of all wars in human history. But I probably won't be able to cover that today.

So let's start with a basic "theory of change" lens. When you're an activist trying to make change in the world, whether it's via a boycott, a new app, a training session, founding an organization, or some other approach, you have a theory of change, whether it's explicit or implicit. You have an assessment of the way the world is, a vision of how you want the world to look, and a hypothesis about some change you could make, an activity or intervention you could perform to move us closer from A to B. There's a pretty common theory of change among copyleft advocates and a couple of theories of change that are common to code of conduct advocates.

The GPL restricts some software developers' freedom (around redistributing software and around adding code under an incompatible license) so as to protect all users' freedom to use, inspect, modify, and hack on software.

The copyleft theory of change supposes that more people will be more free if we can see, modify, and share the source code to software we depend on, and so it's worth it to prohibit enclosure-style private takeovers of formerly shared code. Because in the long run, this will enable free software developers to build on each others' work, and incentivize other developers to choose to make their software free.

Now, codes of conduct, antiharassment policies, friendly space policies: They restrict some people's behavior and require certain kinds of contributions from beneficiaries, so as to increase everyone's capabilities and freedom in the long run.

One pretty popular theory of change goes like this: we will make better software and have a greater impact if more people, and more different kinds of people, find our communities more appealing to work in. One thing making an unpleasant environment and driving away contributors, especially contributors with perspectives that are underrepresented in our communities, is hurtful misbehavior in community spaces. So we'll make the trade and say that it's worth it to restrict some behavior, in order to make the environment better so more, and more varied, people can do work in our communities, and thus make more free software and make it better.

And here's another related one, very similar to the one above, but focusing on the day-to-day freedom of community participants who are marginalized. If the constraint stopping me from, for instance, speaking in an IRC channel is that I strongly suspect I'll be harassed if they know I'm a woman, and that I don't have any reason to believe I can avoid or usefully complain about that harassment, how free am I to participate in that community? Is there perhaps a way to understand a certain level of safety as a necessary prerequisite to liberty?

I realize that this is probably the one room in the world where I have the highest chance of getting into a multi-hour "what does freedom mean" bikeshedding session, so I'm going to avoid focusing on the second model there and focus more on the first one, which emphasizes the end result of more free software.

So I am not assuming that everyone in this room is a copyleft advocate, but I am going to assume from this point forward that we in this room fundamentally understand the restrictive license argument, that we have a handle on the theory of change that it's operating on. And similarly, I'm sure there are people here who aren't so big on codes of conduct, but I'm going to assume that we fundamentally understand the theory of change behind that approach, regardless.

Now let's talk about similarities. Chris Webber calls both of these approaches "added process which define (and provide enforcement mechanisms for) doing the right thing." I agree. Without this kind of gatekeeping we see free rider incentives, on other people's software work and on other people's attention and patience and emotional labor.

They are written-down formalizations of practices and values that some community members think should be so intuitive and obvious that asking people to formally offer or accept the contract is an insult, or at least an unnecessary inconvenience. And so some people counterpropose sort-of-humorous policies, such as the "Do What the Fuck You Want to" software license and "don't be a jerk" codes of conduct.

They are loci of debate and fragmentation.

Some people agree to them thoughtfully, some agree distractedly as they would to corporate clickthrough EULAs, some disagree but click through anyway (acting in bad faith), some disagree and silently leave, some disagree and negotiate publicly, some disagree and fork publicly. Some people won't show up if the agreement is mandatory; some people won't show up UNLESS it's mandatory; some people don't care either way. And, by the way, good community management requires properly predicting the proportions, and navigating accordingly.

Both copyleft licenses and codes of conduct are approaches to solving problems that became more apparent along with different people realizing they have different expectations and needs, and consider different outcomes or processes to be "fair."

These kinds of codes and licenses usually cover specific bounded events and spaces or sites, and their scope covers interpersonal or public interactions. Codes of conduct usually don't cover conversations outside community-run spaces or the beliefs you hold in your head; open source licenses' restrictions usually kick in on redistribution, not use, so they don't constrain anything you do only on your own computer.

Neither one of these approaches can rely on self-enforcement. There is some self-enforcement of both, of course. There's a perception that -- as Harald K. commented on my blog post -- "licenses more or less police themselves (or in extreme instances, are policed by outsiders) whereas codes of conduct need an internal governing structure, a new arena where political power can be exercised." My personal understanding, which I share with people like Matthew Garrett, is that there's a ton of license-breaking happening, and we need to support existing organizations like the Software Freedom Conservancy to police that misbehavior and litigate to defend the GPL. As Conservancy head Karen Sandler points out in her December essay "From a lawyer who hates litigation", "I've seen companies abuse rights granted to them under the GPL over and over again. As the years pass, it seems that more and more of them want to walk as close to the edge of infringement as they can, and some flagrantly adopt a catch-me-if-you-can attitude." And you see enough individuals in our communities acting similarly that I don't think I need to belabor this point; codes of conduct are much more productive when they're actually, you know, enforced.

And both copyleft licenses and codes of conduct restrict freedom regarding certain acts, over and above what is restricted by the law, in the interest of a long-term good, which can in both cases be construed as greater freedom. As Belle Waring says to one skeptic in the Crooked Timber comments, paraphrasing their argument: "part of your reasonable resentment is, 'I don't want to be forced to do freedom-restricting things in support of a very uncertain outcome, just because the final proposed outcome is a good one.'" I will go into that bit of argument later.

But these kinds of agreements are different on a few different axes, which I think are worth considering for what they tell us about open stuff community values and about our intuitions on what kinds of freedom restrictions we find easier to accept.

One is that many codes of conduct focus on in-person events such as conferences, rather than online interactions. Many of the unpleasant incidents that caused communities to adopt CoCs -- or that communities see as "let's not let that happen here" warning bells -- happen at face-to-face events. And face-to-face spaces have a much longer history and context of ways of dealing with bad behavior than do online spaces. After all, a pretty widespread reading of the core function of government and law enforcement is that they keep Us Good Guys safe by stopping The Bad Guys from committing face-to-face (or knife-to-face or chair-to-face) assault.

But there's another axis I want to explore here: whether the behavior constraint feels like a contract or whether it feels like governance. Of course, we toss around phrases like "the social contract" and use the metaphor of contract to talk about the legitimacy of government, but to an ordinary citizen, contracts and governance feel like significantly different things. To oversimplify: to a non-lawyer like me, something that feels like a contract formalizes a specific trade, something discrete and finite and a bit rare. A copyleft license feels that way to me; it specifies that if I distribute a certain artifact -- which is something I would only do after some amount of thought and work -- I then also undertake certain obligations, namely, I must also redistribute the software's source code, under the same license. And, notwithstanding edge cases, it is often easy to examine the artifact, follow a decision procedure, and determine that I have complied with the terms of the license. If I meant to comply in the first place.

On the other hand, when we make rules constraining acts, especially speech acts, it feels more like governance.

Codes of conduct serve as part of a community's infrastructure to fulfill the first duty of a government — to protect its citizens from harm — and in order to make them work, communities must develop governance processes. That is to say, "governance" is what we call it when we're explicit about who gets to make and implement rules that affect everyone in a community, and how we choose those people or get rid of them. And a governance body does not necessarily have to be a legal entity. For instance, in MediaWiki governance, there's an architecture committee that decides on large technical architectural changes, and it has no standing in the eyes of the United States government.

It takes work to evaluate whether actions have complied with rules, and that work might require asking questions of suspects, bystanders, and targets. Enforcing a code of conduct, even a narrowly scoped anti-harassment policy, often requires that someone act on behalf of a community to do this, and to implement the outcome -- be it informed by retributive, rehabilitative, transformative, or some other justice model. And it feels more like governance than contract to me if a rule applies to actions I take many times a day without deliberate planning -- such as saying something in my project's live internet chat room.

One way of thinking about this is: is there some kind of authority that the community acknowledges as having legitimate power over everyday behavior, over and above existing government with a capital G? Because, again, licenses affect certain coding and architectural decisions, but they don't preclude, for instance, everyday discussion. In fact, the social and digital infrastructure it takes to make robust and usable software, including our bug reports, our automated tests, our conversation on mailing lists, and so on, is often not covered by any particular open license -- if it were, maybe we'd be seeing a different level of pushback even from developers who are happy with copyleft as applied to their code.

But I think another interesting thing that happens when you compare a governance model to a contract model, regarding approaches we take to improving behavior in our communities, is seeing how governance wins. It takes a lot of work, but it has a lot of advantages.

Contracts are binary where ongoing dialogue and governance can be more flexible and responsive. If I were going to be really annoying I would compare them to compiled bytecode and to interpretable scripts. Contracts have to sort of self-contain the tests for what the contract permits, mandates, and prohibits, whereas governance mechanisms and bodies can use more general standards, which might change over time. To quote one of the commenters on my essay, Stephenson-quoter kun:

Sometimes it's the little stuff, more subtle than the booth babe/groping/assault/slur kind of stuff, that makes a community feel inhospitable to me. When I say "little stuff" I am trying to describe the small ways people marginalize each other: dominance displays, cruelty in the guise of honesty, the use of power in inhospitable ways, feeling unvalued, "jokes", clubbiness, watching my every public action for ungenerous interpretation, nitpicking, and bad faith.

Changing these habits requires a change of culture, and that kind of deliberate change in culture requires people who take up the responsibility in stewarding the culture.

And a governance approach has a lot more ability to affect culture than a contracts-only approach does.

As Tim McGovern said in the comments to my Crooked Timber post:

Contracts presume an equality between the parties; in theory, both sides can take a breach of contract to court. In practice, of course, a EULA is a contract that masks radical inequality in power between the parties.... Governance requires wrestling with equality in a real way, on the other hand, and voluntarily submitting to an authority constituted in some fashion (over time, by people, etc.), as opposed to preserving a contractual illusion of equality. I recommend that, if you haven't, you check out the article "Mothering versus Contract" by Virginia Held, from Beyond Self-Interest in 1990. It suggests that perhaps we should fundamentally conceive of our interactions with others as following a paradigm of motherhood rather than of contract -- one truth this approach acknowledges is that by default most interactions in your life are opt-out rather than opt-in, if there's any opting or choice at all.

Yes, there's the freedom to fork. But realistically, if you want to get things done, you have to collaborate with others, and we need to accede to other people's demands, in terms of interface compatibility, learning and speaking fluent English, and all sorts of other needs. A FLOSS project with a thriving ecology of contributors is far more valuable than a nearly identical chunk of code with only a couple of voices available to help out, and thus the finite amount of human attention limits our ability to make effective forks. We're more inderdependent than independent, and acknowleding that as a fundamental truth complicates the contracts-y libertarian narrative potentially beyond usefulness.

I hope that my analysis helps give some vocabulary and frameworks for understanding arguments around these issues, and that we can use them to develop more effective arguments.

The first step might be — if you're trying to get your community to adopt a code of conduct, you might benefit by looking at other freedom-restricting tradeoffs the community is okay with, so you can draw out that comparison.

Or with UX (user experience) -- design is the art of taking things away, and when you're advocating for better user experience, which often involves reducing the number of visible ways to do things, consider comparing your approach to one of the freedom tradeoffs that your interlocutor is already okay with, such as the fact that your community has standardized on a single version control system. A single way for that kind of user to interact.

And if you're trying to build a code of conduct consensus in your community, it might help to start by talking, not about day-to-day beavior, but about artifacts that people think of as artifacts. Talk about the things we make, like slide decks for presentations, articles on your wiki. That can get people on the same page as you, in case they're not yet ready to think of the community itself as an artifact we make together.

If you're an advocate for a new initiative, licensing, code of conduct, or something else, understand your own theory of change, and build mental models to help you understand the people who disagree with you. Understand what part of the theory of change they disagree with, and gather data to counter it.

And, incidentally, this lens will also help you appreciate other complementary approaches that will help you achieve your goals. As Mike Linksvayer says: "Of course I think that copyleft advocates who really want to ensure people have software freedom rather than just being enamored of a hack should be always on the lookout for cheaper and/or socialized enforcement (as implied above, control of distribution channels that matter, and state regulation)."

So why might people oppose codes of conduct? Here are a few ideas:

As Chris Webber notes, "there's an argument that achieving real world social justice involves a certain amount of process, laying the ground for what's permitted and isn't, and (if you have to, but hopefully you don't) a specified direction for requiring compliance with that correct behavior." The addendum is that, as Alberto Brandolini said "The amount of energy necessary to refute bullshit is an order of magnitude bigger than to produce it."

So part of the mental model you're trying to understand is what the person you're arguing with is trying to maximize, and another part is whether you agree on how to maximize it.

Paul Davis, the Ardour BDFL, commented on my Crooked Timber post, "The dilemma for a mid-size project like mine is that the overhead of developing and maintaining a CoC seems like just another thing to do amidst a list of things that is already way too long, and one that addresses a problem that we just don't have (yet)." He said he's more worried about technical, architectural decisions causing developer loss.

So, for instance, you could argue with Paul: what genuinely causes developer loss? And what priorities should you have, given your goals?

CoC adoption drives the adoption of explicit governance mechanisms, as Christie Koehler has recently explored in depth in her post "The complex reality of adopting a meaningful code of conduct" .... but we have many open questions that the legal and policy community within free and open source could really help with.

For instance, it's great that we have people like Ashe Dryden and organizations like Safety First PDX helping develop standards and advising organizers on developing and enforcing codes of conduct, but should we actually be centralizing this kind of reporting, codification and enforcement across the FLOSS ecosystem? Different subcommunities have different needs and standards, but just as OSI has helped us stave off the worst possibilities of license proliferation, maybe we should be avoiding the utter haphazardness of Code of Conduct proliferation.

And -- given how interconnected our projects are -- what if single open source projects are the wrong size or shape or scope for this particular aspect of stewardship and governance?

I'd very much appreciate thoughts on this from other folks in future devroom talks or blog posts -- if you tell me this is the kind of thing we talk about on the FLOSS Foundations mailing list then maybe I'll have to bite the bullet and go ahead and subscribe.

The comments on my Crooked Timber piece had many fine insights, on enforcement, culture, exit, voice and loyalty, fairness, and the consent of the governed. They're worth reading.

In addition to the contract-governance contrast, I think it's also worth thinking about the spectrum of liberty versus hospitality. The free software movement really privileges liberty, way over hospitality. And for many people in our movement, free speech, as John Scalzi put it, is the ability to be a dick in every possible circumstance. Criticize others in any words we like, and do anything that is not legally prohibited.

Hospitality, on the other hand, is thinking more about right speech, just speech, useful speech, and compassion. We only say and do things that help each other. The first responsibility of every citizen is to help each other achieve our goals, and make each other happy.

I think these two views exist on a spectrum, and we are way over to one side, the liberty side, as a community, and moving closer to the middle would help everyone learn better and would help us keep and grow our contributor base, and help make it more diverse. And to the extent that comparing codes of conduct to copyleft licenses helps some people put new initiatives in perspective, balancing the relationship between rights and responsibilities, perhaps that can also help shift our culture into one that's more willing to be hospitable. I hope.

William Timberman said in Crooked Timber comments, "how does a socialist persuade a libertarian that coherence and the common good is sometimes a legitimate constraint on individual freedom?" And the answer is that I don't know, but I hope it is a soluble problem, and I hope I've opened up some avenues for exploration on that topic. Thank you.

Good afternoon. I'm Sumana Harihareswara, and I represent myself, and my firm Changeset Consulting. I'm here to discuss some things we can learn from comparing antiharassment policies, or community codes of conduct, to copyleft software licenses such as the GPL. I'll be laying out some major similarities and differences, especially delving into how these different approaches give us insight about common community attitudes and assumptions. And I'll lay out some lessons we can apply as we consider and advocate various sides of these issues, and potentially to apply to some other topics within free and open source software as well.

Good afternoon. I'm Sumana Harihareswara, and I represent myself, and my firm Changeset Consulting. I'm here to discuss some things we can learn from comparing antiharassment policies, or community codes of conduct, to copyleft software licenses such as the GPL. I'll be laying out some major similarities and differences, especially delving into how these different approaches give us insight about common community attitudes and assumptions. And I'll lay out some lessons we can apply as we consider and advocate various sides of these issues, and potentially to apply to some other topics within free and open source software as well.

I. Credibility

II. The basic comparison

A. GPL

B. Codes of Conduct

C. Assumptions

D. Similarities

E. Differences

F. Shortcomings of the contract model

1. Flexibility

contracts explicitly restrict acts which are simply unpardonable -- not sharing the source code to your modified version of a GPL-licensed project, sexually assaulting someone at a conference -- because everyone agrees that those things are wrong and we feel confident that we can agree up-front that there can never be any extenuating circumstances in which those things are actually OK. Governance, however, can serve to 'nudge' people away from bad behaviours – poor coding standards, rudeness on mailing lists -- by giving us a standard to measure those things against without enumerating every possible violation of the standard. A governance procedure can take context into account, and is much more easily subject to improvement and revision than a contract is.

2. Contracts give us an illusion of equality and self-containedness

contracts have taken over as a primary way of negotiating relationships: a EULA is a replacement for a legal understanding of the relationship between two parties who are doing business. I don't, in other words, sign a EULA when I buy a pair of socks -- or even when I buy a car (Teslas excepted) because the purchase relationship is legally defined; even the followup on what can and can't be in your warranty is legally defined. But companies would rather be bound by an agreement they write than a body of law based on either commonlaw or constitutional concepts, or legislation.

3. Contract pretends you have choices

III. Lessons

A. Freedom tradeoff comparisons

B. Artifacts

C. Theory of change

D. A fresh set of governance needs and questions

IV. Other thoughts + Conclusion

A. Comments on my CT piece

B. Hospitality to liberty spectrum

C. This feels like a potentially insoluble problem

# 19 Feb 2016, 06:50PM: What Should We Stop Doing? (FLOSS Community Metrics Meeting keynote):

"What should we stop doing?": written version of a keynote address by Sumana Harihareswara, delivered at the FLOSS Community Metrics Meeting just before FOSDEM, 29 January 2016 in Brussels, Belgium. Slide deck is a 14-page PDF. Video is available. The notes I used when I delivered the talk were quite skeletal, so the talk I delivered varied substantially on the sentence level, but covered all the same points.

So today I want to talk with you about how to use the power you have, in your open source projects and organizations, and about saying no to a lot of things, so you can focus on doing fewer things well -- the Unix philosophy, right? I'll talk about a few tools and leave you with some questions.

The lamppost fallacy is an old one, and the story goes that a drunk guy says, "I dropped my keys, will you help me look for them?" "OK, sure. Where'd you drop them?" "Under that tree." "So why are you looking for them under this lamppost?" "Well, the light is better here."

The first place we ought to check for the lamppost fallacy is in overvaluing quantitative metrics over qualitative analysis when looking at developer workflow and experience. Dave Neary said, in the FLOSSMetrics meeting in 2014, in "What you measure is what you get. Stories of metrics gone wrong": Use qualitative and quantitative analysis to interpret metrics.

When it comes to developer experience, you can be analytical while both quantitative and qualitative. And you rather have to be, because as soon as you start uncovering numbers, you start asking why they are what they are and what could be done to change that, and that's where the qualitative analytical approach comes in.

Qualitative is still analytical! Camille Fournier's post, "Qualitative or quantitative but always analytical", goes into this:

This is applicable to developer experience as well!

For help, I recommend the Wikimedia movement's Grants Evaluation & Learning team's table discussing quantitative and qualitative approaches you can take: ethnography, case studies, participant observation, and so on. To deepen understanding. It's complementary with the quantitative side, which is about generalizing findings.

Another place to check for the lamppost fallacy is in overvaluing quantifiable data about programming artifacts and process over all sorts of data about everything else that matters about your project. Earlier today, Jesus González-Barahona mentioned the many communities -- dev, contributor, user, larger ecosystem -- that you might want to research. There's lots of easily quantifiable data about development, yes, but what is actually important to your project? Dev, user, sysadmin, larger ecology -- all of these might be, honestly, more important to the success of your mission. And we also know some things about how to get better at getting user data.

For help, I recommend the Simply Secure guides on doing qualitative UX research, such as seeing how users are using your product/application. And I recommend you read existing research on software engineering, like the findings in Making Software: What Really Works and Why We Believe It, the O'Reilly book edited by Andy Oram and Greg Wilson.

Another really important tool that will help you say no to some things and yes to others is knowing what kind of assessment you're trying to make, and how that plays into your hypothesis, your theory of change.

I'm going to mess this up compared to a serious education researcher, but it's worth knowing the basics of the difference between formative and summative assessments.

Formative assessment or evaluation is diagnostic, and you should use it iteratively to make better decisions to help students learn with better instruction & processes.

Summative assessment is checking outcomes at the conclusion of an exercise or a course, often for accountability, and judging the worth/value of that educational intervention. In our context as open source community managers, this often means that this data is used to persuade bosses & community that we're doing a good job or that someone else is doing a bad job.

As Dawn Foster last year said in her "Your Metrics Strategy" speech at the FLOSSMetrics meeting:

Here's Ioana Chiorean, FLOSS Community Metrics meeting, January 30th 2015, "How metrics motivate":

Dave Neary in 2014 in "What you measure is what you get. Stories of metrics gone wrong" at the Metrics meeting said:

And this is tough. Because it can be hard to really make a space for truly formative assessment, especially if you are doing everything transparently, because as soon as you gather and publish any data, people will use it to argue that we ought to make drastic changes, not just iterative changes. But it might help to remember what you are truly aiming at, what kind of evaluation you really mean to be doing.

And it helps a lot to know your Theory of Change. You have an assessment of the way the world is, a vision of how you want the world to look, and a hypothesis about some change you could make, an activity or intervention you could perform to move us closer from A to B.

There's a chicken and egg problem here. How do you form the hypothesis without doing some initial measurement? And my perhaps subversive answer is, use ideas from other communities and research to create a hypothesis, and then set up some experiments to check it. Or go with your gut, your instinct about what the hypothesis is, and be ready to discard it if the data does not bear it out.

For help: Check out educational psychology, such as cognitive apprenticeship theory - Mel Chua's presentation here gives you the basics. You might also check out the Program/Grant Learning & Evaluation findings from Wikimedia, and try out how the "pirate metrics" funnel -- Acquisition, Activation, Retention, Referral, Revenue, or AARRR -- fits with your community's needs and bottlenecks.

And the third tool is that when we see data saying that something does not work, we need to have the courage to acknowledge what the data is saying. You can move the goalposts, or you can say no and cause some temporary pain. We have to be willing to take bug reports.

Here's an example. The Wikimedia movement likes to host editathons, where a bunch of people get together and learn to edit Wikipedia together. We hoped that would be a way to train and retain new editors. But Wikipedia editathons don't produce new long-term editors. We learned:

And, in "What we learned from the English Wikipedia new editor pilot in the Philippines":

Providing suggestions via links to places users might go for help did not appear to sufficiently support or motivate these new editors to get involved. 50 percent of those surveyed later said they didn’t look for help pages. Those who did view help pages nevertheless did not edit the suggested articles. But over and over in the Wikimedia movement I see that we keep hosting those one-off editathons. And they do work to, for instance, add new high-quality content about the topics they focus on, and some people really like them as parties and morale boosters, and I've heard the argument that they at least get a lot of people through that first step, of creating an account and making their first edit. But that does not mean that they're things we should be spending time on, to reverse the editor decline trend. We need to be honest about that.

It can be hard to give up things we like doing, things we think are good ideas and that ought to work. As an example: I am very much in favor of mentorship and apprenticeship programs in open source, like Google Summer of Code and Outreachy. Recently some researchers, Adriaan Labuschagne and Reid Holmes, raised questions about mentorship programs in "Do Onboarding Programs Work?", published in 2015, about whether these kinds of mentorship programs move the needle enough in the long run, to bring new contributors in. It's not conclusive, but there are questions. And I need to pay attention to that kind of research and be willing to change my recommendations based on what actually works.

We can run into cognitive dissonance if we realize that we did something that wasn't actually effective. Why did I do this thing? why did we do this thing? There's an urge to rationalize it. The Wikimedia FailFest & Learning Pattern hackathon 2015 recommends that we try framing our stories about our past mistakes to avoid that temptation.

Little 'f' failure framing:

For help with this tool, I suggest reading existing research evaluating what works in FLOSS and open culture, like "Measuring Engagement: Recommendations from Audit and Analytics" by David Eaves, Adam Lofting, Pierros Papadeas, Peter Loewen of Mozilla.

I have a much larger question to leave you with.

One trend I see underlying a big chunk of FLOSS metrics work is the desire to automate the emotional labor involved in maintainership, like figuring out how our fellow contributors are doing, making choices about where to spend mentorship time, and tracking a community's emotional tenor. But is that appropriate? What if we switched our assumptions around and used our metrics to figure out what we're spending time on more generally, and tried to find low-value programming work we could stop doing? What tools would support this, and what scenarios could play out?

This is a huge question and I have barely scratched the surface, but I would love to hear your thoughts. Thank you.

Sumana Harihareswara, Changeset Consulting I'd like to start with a story, about my excellent boss I worked for when I was at the Wikimedia Foundation, Rob Lanphier, and what he told me when I'd been on the job about eight months. In one of our one-on-one meetings, I mentioned to him that I felt overwhelmed. And first, he told me that I'd been on the job less than a year, and it takes a year to ramp up fully in that job, so I shouldn't be too worried. And then he reminded me that we were in an amazing position, that we would hear and get all kinds of great ideas, but that in order to get anything done, we would have to focus. We'd have to learn to say, "That's a great idea, and we're not doing it." And say it often. And, he reminded me, I felt overwhelmed because I actually had the power to make choices, about what I did with my time, that would affect a lot of people. I was not just cog # 15,000 doing a super specialized task at Apple.

I'd like to start with a story, about my excellent boss I worked for when I was at the Wikimedia Foundation, Rob Lanphier, and what he told me when I'd been on the job about eight months. In one of our one-on-one meetings, I mentioned to him that I felt overwhelmed. And first, he told me that I'd been on the job less than a year, and it takes a year to ramp up fully in that job, so I shouldn't be too worried. And then he reminded me that we were in an amazing position, that we would hear and get all kinds of great ideas, but that in order to get anything done, we would have to focus. We'd have to learn to say, "That's a great idea, and we're not doing it." And say it often. And, he reminded me, I felt overwhelmed because I actually had the power to make choices, about what I did with my time, that would affect a lot of people. I was not just cog # 15,000 doing a super specialized task at Apple.

Tool 1: Remember to say no to the lamppost fallacy

A. Quantitative vs qualitative in the dev data

qualitative is still analytical. You may not be able to use data-driven reasoning because you're starting something new, and there are no numbers. It is hard to do quantitative analysis without data, and new things only have secondary data about potential and markets, they do not have primary data about the actual user engagement with the unbuilt product that you can measure. Furthermore, even when the thing is released, you probably have nothing but "small" data for a while. If you only have a thousand people engaging with something, it is hard to do interesting and statistically significant A/B tests unless you change things drastically and cause massive behavioral changes.

B. Quantifiable dev artifacts-and-process data versus data about everything else

Tool 2: know what kind of assessment you're trying to do and how it plays into your theory of change

METRICS ARE USEFUL Measure progress, spot trends and recognize contributors.

Start with goals: WHY FOCUS ON GOALS? Avoid a mess: measure the right things, encourage good behavior.Measure the right things... specific goals that will contribute to your organization's success

be careful what you measure: metrics create incentives

Focus on business and community's success measurementsTool 3: if something doesn't work, acknowledge it

About 52% of participants identified as new users made at least one edit one month after their event, but the percentage editing dropped to 15% in the sixth months after their event

Inviting contribution by surfacing geo-targeted article stubs was not enough to motivate or help users to make their first edits to an article. Together, all new editors who joined made only six edits in total to the article space during this experiment, and they made no edits to the articles we suggested.

Big 'F' failure framing:

Priorities

![Wikimedia Hackathon 2013, by Sebastiaan ter Burg from Utrecht, The Netherlands [CC BY 2.0 (http://creativecommons.org/licenses/by/2.0)], via Wikimedia Commons Wikimedia Hackathon 2013, by Sebastiaan ter Burg from Utrecht, The Netherlands [CC BY 2.0 (http://creativecommons.org/licenses/by/2.0)], via Wikimedia Commons](https://upload.wikimedia.org/wikipedia/commons/thumb/1/1c/Wikimedia_Hackathon_2013_-_Flickr_-_Sebastiaan_ter_Burg_%2823%29.jpg/256px-Wikimedia_Hackathon_2013_-_Flickr_-_Sebastiaan_ter_Burg_%2823%29.jpg)

![Wikimedia Foundation all-hands meeting 2015, by Myleen Hollero (Wikimedia Foundation) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons Wikimedia Foundation all-hands meeting 2015, by Myleen Hollero (Wikimedia Foundation) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons](https://upload.wikimedia.org/wikipedia/commons/thumb/4/4b/2015-wiki-allhands-group-0004.jpg/256px-2015-wiki-allhands-group-0004.jpg)